Managing cloud costs is like trying to diet at a buffet: tempting services everywhere, and one bad decision can blow your budget wide open. So when I was tasked with breaking down Azure usage across 50+ subscriptions for the month of June, I knew this wasn’t going to be a quick Azure Portal copy-paste job.

Instead, I rolled up my sleeves and built a PowerShell script that uses the Azure REST API to automatically:

- Query all accessible subscriptions

- Fetch usage-based cost data for a given time range

- Export it into a clean Excel report

And I made it smart enough to handle throttling too. Here’s how it all came together.

Goals

- Pull Azure cost data from multiple subscriptions

- Offer flexible time range selection (this month, last month, custom, etc.)

- Authenticate securely with Entra ID (Service Principal)

- Export to Excel in a way leadership can digest (bonus points if it opens without errors)

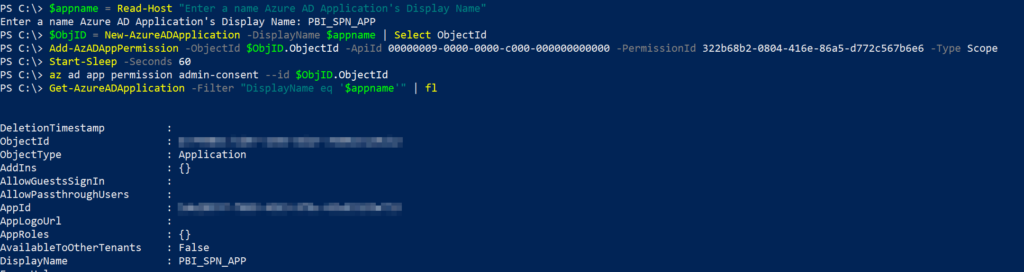

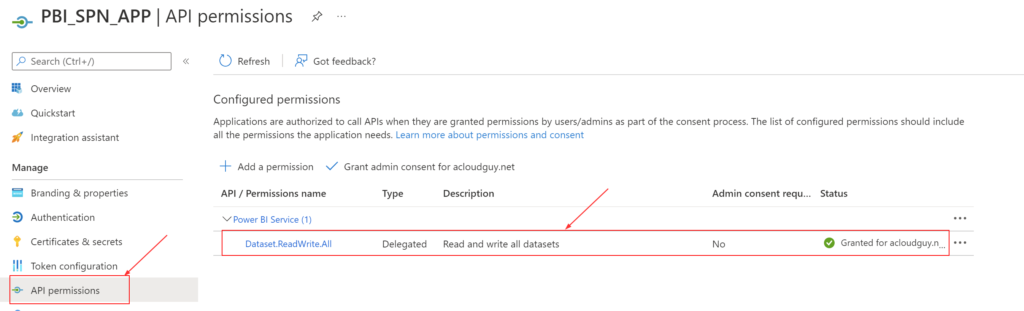

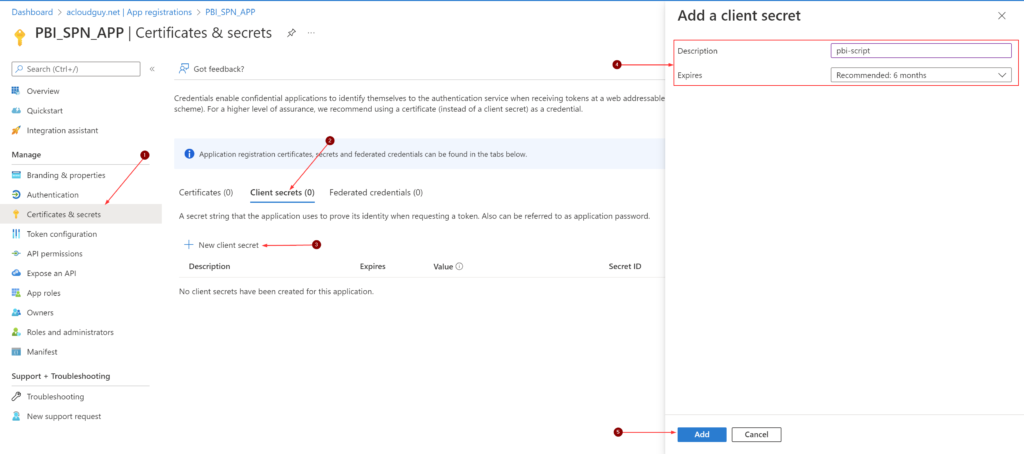

Authentication with Entra ID

I created a Service Principal and assigned it the “Global Billing Reader” role at the billing account level. The script uses the client_credentials flow to authenticate and obtain an access token.

Yes, I temporarily stored the client secret in a plain-text variable (`$clientSecretPlain = 'ENTER_SECRET'`) because I was still prototyping. Don’t judge me. But for production? Put it in a vault or use a managed identity.
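
A small step away from the hardcoded secret is to read it from an environment variable that something else fills in, such as a pipeline secret, an Automation variable or a vault integration. This is a swapped-in technique, not what the prototype does. `AZ_COST_CLIENT_SECRET`, `Get-ClientSecret` and `New-TokenRequestBody` are hypothetical names. The sketch builds the same client_credentials form body that the script posts to the token endpoint.

```powershell
# Sketch: keep the client secret out of the script body.
# AZ_COST_CLIENT_SECRET is a hypothetical variable name; populate it from your
# vault integration, pipeline secret store, or Automation variable.
function Get-ClientSecret {
    param([string]$VariableName = 'AZ_COST_CLIENT_SECRET')

    $value = [Environment]::GetEnvironmentVariable($VariableName)
    if ([string]::IsNullOrWhiteSpace($value)) {
        throw "Environment variable '$VariableName' is not set."
    }
    return $value
}

# Builds the same client_credentials form body the script sends to the token endpoint
function New-TokenRequestBody {
    param(
        [Parameter(Mandatory)][string]$ClientId,
        [Parameter(Mandatory)][string]$ClientSecret
    )
    @{
        grant_type    = 'client_credentials'
        client_id     = $ClientId
        client_secret = $ClientSecret
        scope         = 'https://management.azure.com/.default'
    }
}
```

In the script, `$body = New-TokenRequestBody -ClientId $clientId -ClientSecret (Get-ClientSecret)` would replace the hashtable that uses `$clientSecretPlain`.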

Handling Throttling (429 Errors)

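Azure’s APIs like to throw shade when you hit them too hard, and they answer with HTTP 429 (Too Many Requests). I added retry logic with exponential backoff and jitter. The first retry waits 5 seconds plus 1 to 4 seconds of random jitter, and the base delay doubles after every subsequent 429. Each subscription gets up to seven attempts before the script logs an error and moves on.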
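
The backoff arithmetic is easier to check when it lives in its own function. The sketch below is a hypothetical refactor, not the script's exact code. `Get-RetryDelaySeconds` is a made-up name, and both the cap (`MaxDelaySeconds`) and the optional `RetryAfterSeconds` hint are additions. Pulling a retry hint out of the error works differently in Windows PowerShell and PowerShell 7, so the helper simply accepts the hint as a number.

```powershell
# Sketch: exponential backoff with jitter, optionally honoring a server-provided retry hint.
function Get-RetryDelaySeconds {
    param(
        [Parameter(Mandatory)][int]$Attempt,       # zero-based retry number
        [int]$BaseDelaySeconds = 5,                # same starting delay as the script
        [int]$MaxDelaySeconds  = 300,              # cap so late retries don't wait for ages
        [Nullable[int]]$RetryAfterSeconds = $null  # e.g. parsed from a Retry-After header
    )

    # If the service said how long to wait, use that value as-is
    if ($null -ne $RetryAfterSeconds -and $RetryAfterSeconds -gt 0) {
        return [int]$RetryAfterSeconds
    }

    # Otherwise double the delay on each attempt (5, 10, 20, ...) up to the cap
    $exponential = [math]::Min($BaseDelaySeconds * [math]::Pow(2, $Attempt), $MaxDelaySeconds)

    # Add 1-4 seconds of random jitter so parallel callers don't retry in lockstep
    $jitter = Get-Random -Minimum 1 -Maximum 5
    return [int]$exponential + $jitter
}
```

Inside the script's `catch` block, `$sleepTime = Get-RetryDelaySeconds -Attempt $attempt` would replace the manual `$retryDelay` and `$jitter` arithmetic.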

PowerShell Script

```powershell
# Author: Kumaran Alagesan
# Requires: ImportExcel module (Install-Module -Name ImportExcel)

# Authenticate using Entra Application (Service Principal)
$clientId          = 'ENTER_APP_ID'
$tenantId          = 'ENTER_Tenant_ID'
$clientSecretPlain = 'ENTER_SECRET'  # Prototyping only; use Key Vault or a managed identity in production

# Get access token using Service Principal (client credentials flow)
$body = @{
    grant_type    = "client_credentials"
    client_id     = $clientId
    client_secret = $clientSecretPlain
    scope         = "https://management.azure.com/.default"
}

try {
    $tokenResponse = Invoke-RestMethod -Method Post -Uri "https://login.microsoftonline.com/$tenantId/oauth2/v2.0/token" -Body $body -ContentType "application/x-www-form-urlencoded"
} catch {
    # Invoke-RestMethod throws on HTTP errors (e.g. a wrong secret), so report them here
    Write-Host "Failed to acquire token. Check credentials. $($_.Exception.Message)" -ForegroundColor Red
    exit 1
}

if (-not $tokenResponse.access_token) {
    Write-Host "Failed to acquire token. Check credentials." -ForegroundColor Red
    exit 1
}

$token = @{ accessToken = $tokenResponse.access_token }

# Ask for the reporting period until a valid option is entered
$selection = $null
while (-not $selection) {
    $selection = Read-Host "Select time range: `n1) This month`n2) Last month`n3) This quarter`n4) Last quarter`n5) This year`n6) Last 6 months`n7) Last 12 months`n8) Custom`nEnter number"
    if ($selection -notmatch '^[1-8]$') {
        Write-Host "Invalid selection. Please enter a number from the list (1-8)." -ForegroundColor Yellow
        $selection = $null
    }
}

$today = Get-Date

switch ($selection) {
    '1' { # This month
        $startDate = Get-Date -Year $today.Year -Month $today.Month -Day 1
        $endDate   = $today
    }
    '2' { # Last month
        $lastMonth = $today.AddMonths(-1)
        $startDate = Get-Date -Year $lastMonth.Year -Month $lastMonth.Month -Day 1
        $endDate   = $startDate.AddMonths(1).AddDays(-1)  # last day of that month
    }
    '3' { # This quarter
        $quarter    = [math]::Ceiling($today.Month / 3)
        $startMonth = (($quarter - 1) * 3) + 1
        $startDate  = Get-Date -Year $today.Year -Month $startMonth -Day 1
        $endDate    = $today
    }
    '4' { # Last quarter
        $currentQuarter = [math]::Ceiling($today.Month / 3)
        if ($currentQuarter -eq 1) {
            $lastQuarterYear = $today.Year - 1
            $lastQuarter     = 4
        } else {
            $lastQuarterYear = $today.Year
            $lastQuarter     = $currentQuarter - 1
        }
        $startMonth = (($lastQuarter - 1) * 3) + 1
        $startDate  = Get-Date -Year $lastQuarterYear -Month $startMonth -Day 1
        $endDate    = $startDate.AddMonths(3).AddDays(-1)  # last day of that quarter
    }
    '5' { # This year
        $startDate = Get-Date -Year $today.Year -Month 1 -Day 1
        $endDate   = $today
    }
    '6' { # Last 6 months (current month plus the previous 5)
        $startDate = $today.AddMonths(-5)
        $startDate = Get-Date -Year $startDate.Year -Month $startDate.Month -Day 1
        $endDate   = $today
    }
    '7' { # Last 12 months (current month plus the previous 11)
        $startDate = $today.AddMonths(-11)
        $startDate = Get-Date -Year $startDate.Year -Month $startDate.Month -Day 1
        $endDate   = $today
    }
    '8' { # Custom
        $startInput = Read-Host "Enter start date (yyyy-MM-dd)"
        $endInput   = Read-Host "Enter end date (yyyy-MM-dd)"
        try {
            $startDate = [datetime]::ParseExact($startInput, 'yyyy-MM-dd', $null)
            $endDate   = [datetime]::ParseExact($endInput, 'yyyy-MM-dd', $null)
        } catch {
            Write-Host "Invalid date format. Exiting." -ForegroundColor Red
            exit 1
        }
        if ($endDate -lt $startDate) {
            Write-Host "End date must be on or after the start date. Exiting." -ForegroundColor Red
            exit 1
        }
    }
}

$startDateStr = $startDate.ToString("yyyy-MM-dd")
$endDateStr   = $endDate.ToString("yyyy-MM-dd")

# Set headers for REST calls using the service principal token
$headers = @{
    'Authorization' = "Bearer $($token.accessToken)"
    'Content-Type'  = 'application/json'
}

# Get all subscriptions the service principal can see, following nextLink if the list is paged
$subscriptions = @()
$subsUrl = "https://management.azure.com/subscriptions?api-version=2020-01-01"
while ($subsUrl) {
    $page = Invoke-RestMethod -Uri $subsUrl -Headers $headers -Method Get
    $subscriptions += $page.value
    $subsUrl = $page.nextLink
}

Write-Host "Fetching cost data for $($subscriptions.Count) subscriptions: " -NoNewline

$totalCost = 0
$results = @()

foreach ($sub in $subscriptions) {
    # Total usage cost for the selected period, aggregated into a single row
    $costQueryBody = @{
        type       = "Usage"
        timeframe  = "Custom"
        timePeriod = @{
            from = $startDateStr
            to   = $endDateStr
        }
        dataSet    = @{
            granularity = "None"
            aggregation = @{
                totalCost = @{
                    name     = "Cost"
                    function = "Sum"
                }
            }
        }
    } | ConvertTo-Json -Depth 10

    $costUrl = "https://management.azure.com/subscriptions/$($sub.subscriptionId)/providers/Microsoft.CostManagement/query?api-version=2024-08-01"

    $maxRetries = 7   # total attempts per subscription
    $retryDelay = 5   # seconds; doubles after every 429
    $attempt    = 0
    $success    = $false

    while (-not $success -and $attempt -lt $maxRetries) {
        try {
            $costData = Invoke-RestMethod -Uri $costUrl -Headers $headers -Method Post -Body $costQueryBody

            # First column of the first row holds the summed cost (no rows means no usage)
            $subscriptionCost = 0
            if ($costData.properties.rows -and $costData.properties.rows.Count -gt 0) {
                $subscriptionCost = $costData.properties.rows[0][0]
            }

            $results += [PSCustomObject]@{
                'Subscription Name' = $sub.displayName
                'Total Cost'        = [math]::Round([double]$subscriptionCost, 2)
            }
            $totalCost += $subscriptionCost
            Write-Host "." -NoNewline
            $success = $true
        }
        catch {
            if ($_.Exception.Response.StatusCode.value__ -eq 429 -and $attempt -lt ($maxRetries - 1)) {
                # Throttled: wait the current delay plus random jitter, then double the delay
                $jitter    = Get-Random -Minimum 1 -Maximum 5
                $sleepTime = $retryDelay + $jitter
                Write-Host "`n429 received, retrying in $sleepTime seconds..." -ForegroundColor Yellow
                Start-Sleep -Seconds $sleepTime
                $retryDelay *= 2
                $attempt++
            }
            else {
                # Any other error, or retries exhausted: log it and move on to the next subscription
                Write-Host "x" -NoNewline
                Write-Host "`nError getting cost for subscription $($sub.displayName): $($_.Exception.Message)" -ForegroundColor Red
                $success = $true
            }
        }
    }
}
Write-Host ""  # end the progress line

# Export results to Excel (next to the script, or the current folder when run interactively)
$outputDir = if ($PSScriptRoot) { $PSScriptRoot } else { (Get-Location).Path }
$excelPath = Join-Path -Path $outputDir -ChildPath ("AzureCostReport_{0}_{1}.xlsx" -f $startDateStr, $endDateStr)

if ($results.Count -gt 0) {
    # Do not pre-format 'Total Cost' as string; keep as number for Excel formatting

    # Check whether the file is already open (e.g. in Excel) before overwriting it
    $fileLocked = $false
    if (Test-Path $excelPath) {
        try {
            $stream = [System.IO.File]::Open($excelPath, 'Open', 'ReadWrite', 'None')
            $stream.Close()
        } catch {
            $fileLocked = $true
        }
    }

    if ($fileLocked) {
        Write-Host "Excel file is open or locked: $excelPath. Please close it and run the script again." -ForegroundColor Red
    } else {
        $results | Export-Excel -Path $excelPath -WorksheetName 'CostReport' -AutoSize -TableName 'CostSummary' -Title "Azure Cost Report ($startDateStr to $endDateStr)" -TitleBold -ClearSheet
        Write-Host "Excel report saved to: $excelPath"

        # Optionally open the file. $IsWindows only exists in PowerShell 6+,
        # so also check for Windows PowerShell 5.1 ('Desktop' edition).
        if ($IsWindows -or $PSVersionTable.PSEdition -eq 'Desktop') {
            Start-Sleep -Seconds 2
            Invoke-Item $excelPath
        }
    }
}
```

If you want to email the output to a mailbox as a table in the message body, replace the ‘Export results to Excel’ section with the code below. Yup! I know Send-MailMessage is obsolete. Ideally I’d run this script within an Azure Automation account and grant the identity app permissions to send email. I’ll cover that in a later post.

```powershell
# Prepare HTML table for email
if ($results.Count -gt 0) {
    # Add a formatted 'Total Cost ($)' column, e.g. "$1,234.50"
    $resultsWithDollar = $results | ForEach-Object {
        $_ | Add-Member -NotePropertyName 'Total Cost ($)' -NotePropertyValue ('${0:N2}' -f [double]$_.'Total Cost') -Force
        $_
    }

    $style = "<style>table{border-collapse:collapse;}th,td{border:1px solid #ccc;padding:5px;}</style>"
    # -Fragment emits only the <table>, not a full HTML page, so it can be embedded in the body
    $htmlTable = $resultsWithDollar | ConvertTo-Html -Property 'Subscription Name', 'Total Cost ($)' -Fragment | Out-String

    $htmlBody = @"
$style
<h2>Azure Cost Report ($startDateStr to $endDateStr)</h2>
$htmlTable
<p><b>Total Cost (all subscriptions):</b> $('${0:N2}' -f [double]$totalCost)</p>
<p style='color:gray;font-size:small;'>This is an automatically generated email - Please do not reply.</p>
"@

    # Email parameters (update these as needed)
    $smtpServer = "smtp.domain.com"  # SMTP host name, not an email address
    $smtpPort   = 587
    $from       = "alerts@domain.com"
    $to         = "emailaddress@domain.com"
    $subject    = "Azure Cost Report ($startDateStr to $endDateStr)"

    # Port 587 normally expects STARTTLS (-UseSsl); add -Credential if your relay requires authentication
    Send-MailMessage -From $from -To $to -Subject $subject -Body $htmlBody -BodyAsHtml -SmtpServer $smtpServer -Port $smtpPort -UseSsl
    Write-Host "Cost report sent via email to $to"
} else {
    Write-Host "No results to send."
}
```

What You’ll Get

The final Excel report displays each subscription’s name alongside its total cost for your chosen time period. Whether you’re reviewing it manually or feeding it into FinOps tools, the format is designed for quick analysis and clean presentation.
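
You may want the report to point straight at the biggest spenders. One option is to sort by cost and add each subscription's share of the total before exporting. This is an optional extra, not part of the script. `Add-CostShare` and the 'Share (%)' column are hypothetical, and the function expects objects shaped like the script's `$results`.

```powershell
# Sketch: rank subscriptions by cost and add each one's share of the grand total.
# Add-CostShare and the 'Share (%)' column are hypothetical additions, not part of the script.
function Add-CostShare {
    param(
        [Parameter(Mandatory)][object[]]$Rows   # objects with 'Subscription Name' and 'Total Cost'
    )

    # Grand total across all rows
    $grandTotal = 0.0
    foreach ($row in $Rows) { $grandTotal += [double]$row.'Total Cost' }

    # Highest cost first, with a percentage column rounded to one decimal place
    $Rows | Sort-Object { [double]$_.'Total Cost' } -Descending | ForEach-Object {
        $share = if ($grandTotal -gt 0) { [math]::Round(100 * [double]$_.'Total Cost' / $grandTotal, 1) } else { 0 }
        [PSCustomObject]@{
            'Subscription Name' = $_.'Subscription Name'
            'Total Cost'        = $_.'Total Cost'
            'Share (%)'         = $share
        }
    }
}
```

For example, `Add-CostShare -Rows $results | Export-Excel -Path $excelPath -WorksheetName 'CostReport' -AutoSize -TableName 'CostSummary'` would write the ranked version instead.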

Practical Applications

| Scenario | How It Helps |
|---|---|
| Automation and scheduling | Supports routine reporting via scheduled tasks or DevOps flows |
| Multi-subscription environments | Consolidates cost data across departments or teams |
| Governance and FinOps | Enables proactive budget tracking and reporting |
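
Scheduled runs have one catch. The script asks for the time range with Read-Host, so an unattended task would wait indefinitely for input. A possible fix, sketched here and not part of the original script, is to accept the choice as a parameter and only prompt when it is missing. `Resolve-TimeRangeSelection` and `-Selection` are hypothetical names.

```powershell
# Sketch: take the time-range choice from a parameter so unattended runs don't block on Read-Host.
# Resolve-TimeRangeSelection is a hypothetical helper; it only prompts when no valid value is passed.
function Resolve-TimeRangeSelection {
    param(
        [string]$Selection   # '1'-'8', same menu numbers as the script
    )

    while ($Selection -notmatch '^[1-8]$') {
        if ($Selection) {
            Write-Host "Invalid selection '$Selection'. Please enter a number from the list (1-8)." -ForegroundColor Yellow
        }
        $Selection = Read-Host "Select time range: `n1) This month`n2) Last month`n3) This quarter`n4) Last quarter`n5) This year`n6) Last 6 months`n7) Last 12 months`n8) Custom`nEnter number"
    }
    return $Selection
}
```

To use it, you could add `param([string]$Selection)` at the top of the script. Then replace the prompt loop with `$selection = Resolve-TimeRangeSelection -Selection $Selection`. A scheduled task would run something like `pwsh -File .\AzureCostReport.ps1 -Selection 2` (the file name is hypothetical). Option 8 still prompts for custom dates, so unattended runs should stick to options 1 to 7.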

With just a PowerShell script and the Azure Cost Management API, you can unlock instant insights into your cloud spend across all Azure subscriptions. Whether you’re part of a DevOps team, driving FinOps initiatives, or simply managing cloud budgets, this automation makes cost visibility one less thing to worry about.

Lessons Learned

- Azure Cost Management API is powerful, but throttling is real.

- Microsoft plans to retire the Consumption Usage Details API and recommends against taking a new dependency on it, so the Cost Management Query API is the safer choice for new scripts like this one.

- Export-Excel is a lifesaver, especially when you want your report to actually be readable.

Room for Improvement

- Add a per-subscription breakdown by Azure MeterCategory to the email report to show where the spend is actually going

- Move secrets to Azure Key Vault or use Managed Identity

- Add monthly trend analysis and forecasting

- Push the data to Power BI for richer dashboards
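
For the MeterCategory idea, the Cost Management Query API supports grouping a query by a dimension. Below is a sketch of the request body and the response parsing. It has not been run against a live tenant, and the function names are hypothetical. It assumes the response names its columns after the aggregation (`Cost`) and the grouping dimension (`MeterCategory`). Check `properties.columns` in a real response and adjust the column parameters if yours differ.

```powershell
# Sketch: break a subscription's cost down by MeterCategory.
# Function names are hypothetical; column names are assumed, so they are looked up
# in properties.columns rather than hardcoded as positions.
function New-CostByMeterCategoryQuery {
    param(
        [Parameter(Mandatory)][string]$From,   # yyyy-MM-dd
        [Parameter(Mandatory)][string]$To      # yyyy-MM-dd
    )
    @{
        type       = 'Usage'
        timeframe  = 'Custom'
        timePeriod = @{ from = $From; to = $To }
        dataSet    = @{
            granularity = 'None'
            aggregation = @{ totalCost = @{ name = 'Cost'; function = 'Sum' } }
            grouping    = @(@{ type = 'Dimension'; name = 'MeterCategory' })
        }
    } | ConvertTo-Json -Depth 10
}

# Turns a query response into one row per meter category
function ConvertFrom-CostByMeterCategory {
    param(
        [Parameter(Mandatory)]$Result,
        [string]$SubscriptionName,
        [string]$CostColumn  = 'Cost',           # assumed column name
        [string]$GroupColumn = 'MeterCategory'   # assumed column name
    )
    $names    = @($Result.properties.columns | ForEach-Object { $_.name })
    $costIdx  = [array]::IndexOf($names, $CostColumn)
    $groupIdx = [array]::IndexOf($names, $GroupColumn)
    if ($costIdx -lt 0 -or $groupIdx -lt 0) {
        throw "Expected columns '$CostColumn' and '$GroupColumn' in the response."
    }
    foreach ($row in $Result.properties.rows) {
        [PSCustomObject]@{
            'Subscription Name' = $SubscriptionName
            'Meter Category'    = $row[$groupIdx]
            'Total Cost'        = [math]::Round([double]$row[$costIdx], 2)
        }
    }
}
```

Inside the subscription loop, you would POST the output of `New-CostByMeterCategoryQuery -From $startDateStr -To $endDateStr` to the same `$costUrl`. Then pass the response to `ConvertFrom-CostByMeterCategory`.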

Final Thoughts

This script is now my go-to tool for quickly generating Azure cost reports across environments. It’s flexible, reliable, and gives my leadership team the visibility they need to make informed decisions, without logging into the portal.

Because let’s face it: if you’re managing Azure at scale, you shouldn’t be clicking through billing blades. You should be scripting your way to clarity.

Keep those costs in check, one API call at a time.

Thanks for stopping by. ✌